Artificial intelligence has moved from theory to daily reality in law practice. Legal professionals now face a pressing question: how do we use these powerful tools without compromising the ethical foundations of our profession? The ethics of using AI in legal writing demands attention because the stakes are high—client confidentiality, accuracy of legal analysis, and the integrity of the judicial system all hang in the balance.

AI technology offers real benefits. It can speed up document review, improve legal research, and free lawyers to focus on strategy. But these advantages come with risks. From AI-generated fake citations to potential breaches of client data, the legal industry must address ethical implications head-on. The American Bar Association and state bars are racing to provide guidance, but lawyers can't wait for perfect rules before acting responsibly.

AI Tools and the Evolution of Legal Technology

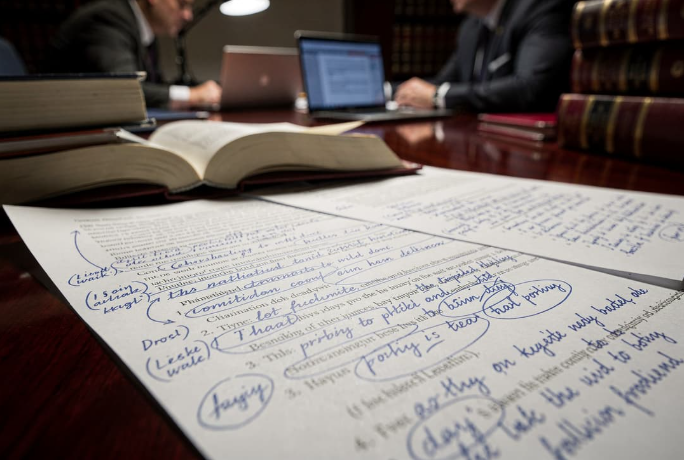

Legal technology has transformed rapidly. Generative AI tools now draft contracts, summarize depositions, and suggest legal arguments. These AI systems can analyze thousands of documents in minutes—work that once took associates days to complete.

But adoption requires due diligence. Before integrating AI-generated content into your workflow, you need to understand what you're working with. How was the AI model trained? Does it store your input? Can it access your client data? These aren't just technical questions—they're ethical ones.

The practice of law demands more than efficiency. When you use generative AI, you're still responsible for every word that goes into a brief or motion. AI tools should streamline legal tasks, not replace your judgment. Ethical issues arise when lawyers treat AI outputs as final work product instead of rough drafts requiring careful review.

Many legal professionals worry about data security, and for good reason. In 2024, 23% of law firms experienced AI-related data incidents. That's not a small number. Each incident represents a potential breach of client confidentiality and of professional conduct obligations.

Benefits of AI Systems in Legal Research and Analysis

AI systems excel at pattern recognition and data processing. In legal research, this means faster case law analysis and more comprehensive results. Predictive analytics can help assess likely outcomes in legal matters, giving clients better information for settlement decisions.

Document review has seen dramatic improvements. AI tools can flag relevant passages, identify inconsistencies, and organize massive discovery productions. Lawyers spend 40% to 60% of their time drafting and reviewing contracts, and generative AI can free up a significant share of that time for more strategic work.

But speed without accuracy is worthless. Critical thinking remains essential. You can't outsource legal analysis to an algorithm and call it competent representation. The judicial system expects lawyers to understand their cases, not just copy-paste AI outputs.

Concerns about the unauthorized practice of law also arise. When AI tools provide legal advice directly to consumers without lawyer supervision, they may cross ethical lines. Legal professionals must maintain control over legal tasks, even when AI assists with preliminary work.

Ethical Concerns: Bias, Training Data, and Confidentiality

Training data shapes everything AI produces. AI systems learn from historical information, which often contains societal biases. When algorithms perpetuate these biases, they can lead to discriminatory outcomes in legal claims and analysis.

Lawyers must audit AI outputs for bias. This isn't optional—it's part of providing competent representation. If your AI tool suggests language that disadvantages certain groups, you're ethically required to catch and correct it. Independent judgment protects clients from algorithmic discrimination.

Safeguarding client confidentiality presents another challenge. When you input case details into a cloud-based AI system, where does that information go? The ABA Model Rules of Professional Conduct require lawyers to make reasonable efforts to prevent the unauthorized disclosure of client information (Rule 1.6). California State Bar guidance emphasizes that lawyers should ensure AI providers don't share inputted information with third parties or use it for training purposes.

Meeting these obligations means understanding how your AI tools handle what you give them. Can the vendor access your prompts? Does it retain copies of your documents? The answers determine whether you can ethically use a tool for legal matters involving sensitive information.

At BriefCatch, we address these concerns directly. We never store, retain, or use your text or documents—your content is processed in RAM only and promptly cleared. Your data will never be used to train AI models. This zero-retention approach aligns with ethical practices for handling client data.

Managing Unauthorized Practice of Law and Model Rules Compliance

The line between helpful AI and unauthorized practice of law can blur. AI tools that provide legal advice without lawyer supervision may violate regulations designed to protect consumers. Responsible AI use requires human oversight at every stage.

Professional judgment can't be delegated to software. Judicial officers and ethics committees have made this clear: lawyers remain accountable for all work product, regardless of how it was generated. You can use AI to draft a motion, but you must review, revise, and verify every assertion.

The regulatory landscape continues to evolve. State bars are issuing interim guidance while they develop comprehensive policies. Texas issued Opinion No. 705 in February 2025, providing specific guidance on generative AI use. Other jurisdictions will follow.

Competent representation means understanding your tools. If you don't know how your AI system works, you can't assess its reliability. This is where technological competence becomes an ethical obligation, not just a practical skill.

Ensuring Professional Conduct and Maintaining Competence

Maintaining competence in AI technology is now part of being a lawyer. The legal profession must adapt to new tools while preserving core values. This means continuous learning about AI capabilities and limitations.

Technological competence requires more than knowing how to use software. You need to understand when AI-generated content might be wrong. Stanford research found that leading legal AI tools hallucinate frequently—Westlaw's AI-Assisted Research produced incorrect information more than 34% of the time.

Remain vigilant about legal standards. Every AI-generated brief, contract, or memo must meet the same quality bar as human-drafted work. Professional conduct rules don't create exceptions for AI mistakes. You're responsible for catching errors before they reach clients or courts.

Legal work demands accuracy. More than 300 cases of AI-driven legal hallucinations have been documented since mid-2023, with at least 200 recorded in 2025. Courts are sanctioning lawyers who file AI-generated fake citations. These aren't isolated incidents—they're a pattern that demands attention.

Real-World Applications of Generative AI Tools

Generative AI tools work best for routine legal matters. Contract review, discovery organization, and initial research drafts are good use cases. These applications let legal professionals focus on complex analysis while AI handles repetitive tasks.

But AI usage must be balanced with human creativity and judgment. A law firm might use AI to generate a first draft of a motion, but lawyers must add case-specific arguments, refine the legal analysis, and ensure every citation is accurate. The AI provides a starting point, not a finished product.

Due diligence protects against ethical issues. Before deploying any AI tool, verify its security protocols, understand its data handling, and test its accuracy. Non-lawyers in your firm who use AI need training on these safeguards too.

The practice of law remains a human endeavor. AI can draft language, but it can't assess a judge's temperament, understand a client's true goals, or make strategic decisions about settlement. These judgment calls define legal practice and can't be automated.

Legal Education, Judicial Officers, and the Evolving Landscape

Legal education must prepare future lawyers for AI-enhanced practice. Law schools are beginning to integrate AI use into curricula, teaching students both the opportunities and the ethical constraints. This foundation will help the next generation of legal professionals navigate technology responsibly.

Judicial officers play a key role in setting standards. Courts are developing policies on AI use in chambers and by litigants. The Thomson Reuters Institute and National Center for State Courts launched AI Literacy for Courts, offering training for judges, clerks, and other court personnel.

To provide competent representation, lawyers must blend AI technology with human oversight. This means using AI to enhance efficiency while maintaining the critical thinking and professional judgment that clients deserve. Judicial officers expect lawyers to understand their cases deeply, not just present AI-generated arguments.

The evolving landscape of the legal profession demands flexibility. AI ethics will continue to develop as technology advances and regulators respond. Lawyers who stay informed and maintain ethical standards will be best positioned to serve clients effectively.

Moving Forward with Responsible AI in Legal Practice

Responsible AI starts with clear policies. Document how your firm uses AI, what safeguards you've implemented, and how you verify AI outputs. This protects both clients and your practice.

Safeguarding client confidentiality requires choosing vendors carefully. Look for SOC 2 certification, encryption standards, and explicit commitments not to train models on your data. BriefCatch's AI features are completely optional and user-controlled, with zero data retention to protect your work product.

AI outputs must align with legal practice guidelines. Verify every citation, review every legal argument, and ensure every factual assertion is accurate. Treat AI-generated content as a rough draft that requires thorough human review.

The key points are straightforward: maintain control, protect confidentiality, verify accuracy, and exercise professional judgment. These ethical standards aren't new—they're the same principles that have always governed legal work. AI just requires you to apply them in new contexts.

If you're looking for tools that respect these ethical boundaries, BriefCatch offers AI-powered editing suggestions with enterprise-grade security and zero data retention. Our platform helps legal professionals refine their writing while maintaining full control over when and how AI assists their work. Try our free trial or book a demo to see how responsible AI can enhance your practice without compromising ethical standards.
